Everything breaks at scale

This is a video of a talk I gave at Beyond Tellerrand in Dusseldorf in May, 2022. A text version with the slides appears below.

The last time I spoke at Beyond Tellerrand I introduced myself by saying “I really like strange rabbitholes on the internet, the stranger the better.”

I’ve always loved a mystery. I grew up reading Trixie Belden and Encyclopedia Brown.

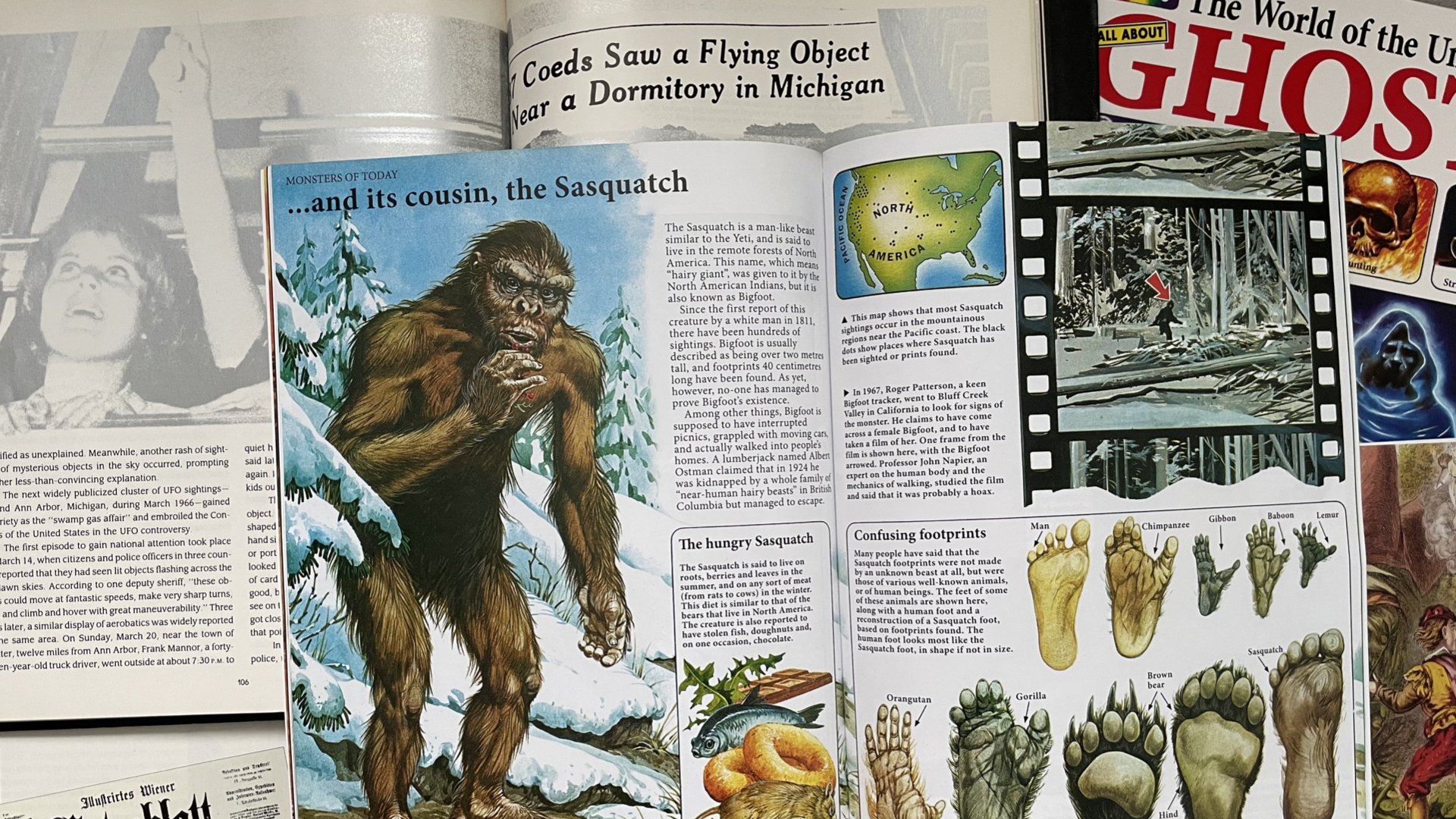

Dinosaurs held my attention as a child because palaeontologists pieced their lives together from evidence they dug out of the ground like a giant puzzle. I adored books about UFOs, the Loch Ness Monster, and Bigfoot. There was nothing I loved more than something unexplained.

By the time I got to university, that fascination hadn’t let up. I dug into the classics, reading books about the Kennedy assassination that put forward the kind of fringe theories that never withstand close scrutiny but are somehow still so fascinating you find yourself telling other people about them. Theories about faked autopsy results and second coffins and god knows what else.

This was all before the internet, because I am extremely old. When you couldn’t google an answer, you would tell stories like this, over drinks in a bar. There was no way to prove or disprove anything in that moment. It was almost an oral tradition. And there was certainly no way for the ideas to spread exponentially. You had to go looking for this stuff, buying out-of-print second-hand books or putting things on reserve at the library.

Eventually, I guess, I grew out of most of it. I watched The X-Files on television and stopped wondering whether people who thought they were alien abductees were telling the truth.

Two things happened in the 2000s that really changed the way I thought about this: the first was discovering 9/11 “truthers” and the second was discovering Lord of the Rings “tinhats”. At the time, I didn’t realise how connected those things were.

I have no recollection now of how I came across the infamous Loose Change documentary that kicked off the conspiracy theories about the horrific tragedy that happened on 9/11. It was before YouTube, so I assume it was through one of the community websites I regularly visited, like Metafilter. But as I watched it I was absolutely transfixed, and it led me to seek out weirder and weirder theories about what might have happened on 9/11. To be very clear, this wasn’t because I believed them, but because the more outlandish the claim, the more I desperately wanted to understand how anyone could. How could you look at video footage of that day, zoomed in and slowed down, and reach the conclusion that people weren’t fighting for their lives? That instead they were puppets, or holograms, or government actors? And how could you believe something so divorced from reality, and then choose to share it and try to convince other people of the same?

Meanwhile, the Lord of the Rings movie trilogy was being made in New Zealand while I was working overseas. Each Christmas, as a new film was released, it was a rite of passage to go and see it in the cinema and just feel deeply homesick for my country. I never really got into the fandom side of the movies, though the fandom was huge and active at the time, until I started to learn more about their RPF community.

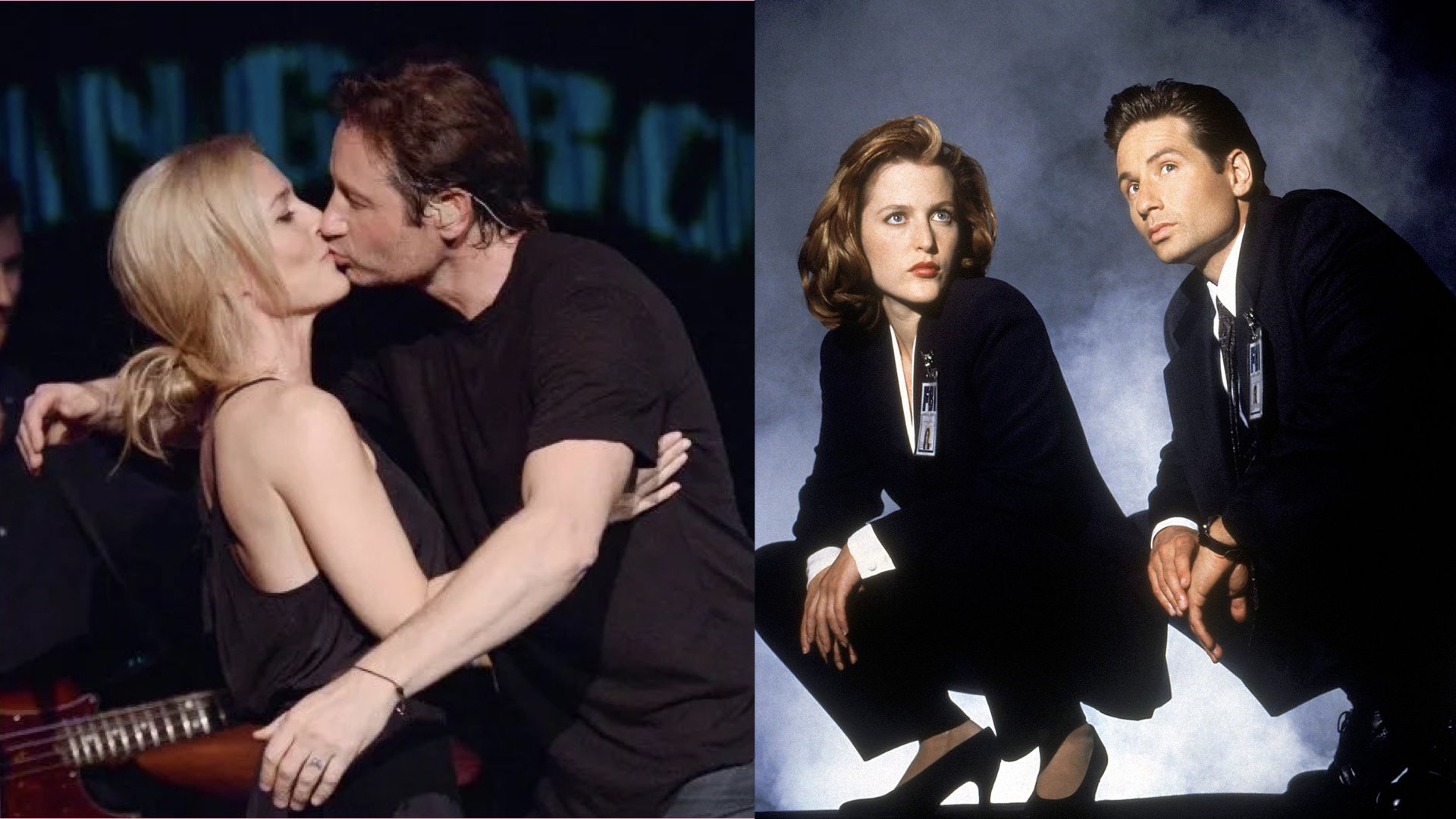

RPF (short for “real person fandom” or “real person fic”) refers to fandom and fanfiction stories about the actors in a show or movie, rather than their characters. About musicians, or sportspeople — real people.

Instead of writing stories about Mulder and Scully and their adventures fighting monsters, an RPF fan might write stories about Gillian Anderson and David Duchovny and their adventures in their trailers when shooting wrapped for the day. When I first started hanging out in fandom spaces, there was a much more serious taboo around RPF. Many fans saw it as disrespectful to the performers, breaking a fourth wall, and so on. But as more and more behind the scenes content was deliberately marketed to fans, and as social media led to actors’ and musicians’ day-to-day lives becoming “content” in and of itself, those taboos started to break down.

The Lord of the Rings fandom was absolutely ripe for RPF, because the cast had spent so much time together, far away from home, and the behind-the-scenes content made it look like they’d had an incredible summer-camp time of it making movies and goofing off together. Fans loved seeing it. Some fans loved imagining they’d been having an extra good time together.

And a small group of fans went a little further. It wasn’t just that they imagined actors like Dominic Monaghan and Elijah Wood might have made a cute couple, it was that they were convinced they were, in fact, a couple. A secret couple. Doomed to keep their love from the public at the insistence of the mysterious Powers That Be.

If you’ve watched my first Beyond Tellerrand talk, you’ll be starting to recognise a theme. These fans weren’t content to just appreciate some cute boys who had a bromance off-screen. They constructed elaborate theories and timelines, attributed evil motives to corporate overlords who were preventing their Great Love from being known to the world, and looked for secret signs and signals that their faves were letting them know they saw them and appreciated their support.

Plagued by their extreme behaviour, the Lord of the Rings fandom gave these people a nickname: tinhats. Short for the tinfoil hats we’d already come to recognise those scared of aliens and government conspiracies would wear to protect themselves from all manner of unprovable outside threats.

From then on, conspiracy theorists in every fandom were referred to the same way. And unfortunately tinhats pop up in more or less every fandom: convinced that Benedict Cumberbatch’s marriage is a sham and his children are fake…

…that the stars of Outlander are secretly living together…

…that ice dancers Scott Moir and Tessa Virtue have chemistry on the ice because of their great love off it.

Looking back, I used to think tinhats were amusing. It’s part of why using the Larry Stylinson shippers as a hook for that first talk was entertaining. The confusing part for people was not that Louis and Harry might have made a cute couple. It was … wait, people actually believe this is real?

They do. They still do, seven years after One Direction broke up, six years after Harry and Louis were last seen together, after Louis had a child, and got back together with his long-term girlfriend. They still believe it. They gather online and post evidence and speculate about theories and they rail against the mysterious powers and ironclad contracts holding these two wealthy white men back from declaring their Secret Love.

These people are conspiracy theorists. They’re no different than the 9/11 Truthers. And if there’s anything that the last few years have taught us, it’s that conspiracy thinking isn’t amusing and it isn’t harmless.

As conspiracy thinking has risen to a truly horrifying level of prominence, I’ve spent time thinking about what brings these people together, and how we, as the people who build for the internet, have all contributed to the problem. How we need to address where this thinking comes from, the ways in which the tools and the platforms we’ve created and contributed to have amplified and accelerated its spread, and what we can do now to change direction.

Conspiracy thinking isn’t new.

You can read rafts of work on how people have believed things to be other than they seemed, all the way back to speculation about what Nero was doing while Rome burned. Did he really set the fire himself?

Part of the problem with conspiracy thinking is that there is, at the core, always at least a kernel of truth. Conspiracists are not totally detached from reality. History has demonstrated over and over that, yes, politicians and leaders do lie.

Powerful figures do attempt to cover up wrongdoing.

Some paparazzi shots do seem staged.

Distrust in authority isn’t necessarily irrational. It’s too easy to dismiss the people involved as “crazy”, but when you look at why someone would continue to surround themselves with ideas that are almost laughably false, the reasons are extremely normal.

So none of this is new. Of course we all know that what is new is the way in which the internet has empowered and amplified these theories, made the spread of misinformation commonplace, and provided homes and platforms for these people and their ideas.

We know how people get exposed to conspiracy thinking. You’ve all read a thousand articles about algorithms and amplification and how social media is rotting our brains. I’m more interested in a different question. Why do people stay?

I think there are three things at the core of this, three deep-seated human needs, that are being met by conspiracy theories.

Community, purpose and dopamine.

The first one is the most obvious. As human beings we crave being in community. Never has the evidence for that been more stark than over the two years of the pandemic when we’ve been separated from friends and loved ones, and so dependent on the response of the people around us.

Over the years as I’ve paid more attention to the way people come to embrace celebrity conspiracy theories, the thing that strikes me over and over is that what they’re really enjoying is being part of a community. Sure, they come online each day in part to discover the latest tidbits, but also because the people they’re sharing them with have become friends, comrades in arms, people they believe care about them.

There’s a wealth of literature written about the ways in which over the last few centuries our real-world community structures have broken down. As we moved from small villages to cities. As we moved out of traditional religious structures - parishes. As we got further away from extended family.

It’s been over twenty years since Putnam wrote Bowling Alone in which he argued that we have become increasingly disconnected from family, friends, neighbours, and our democratic structures. That changes in work, family structure, suburban life, television, computers, and other factors have contributed to a decline in civic participation. Voluntary membership of clubs, like bowling leagues, he argued, was a healthy indicator of the kind of trust and reciprocity we need for healthy communities. Decline in those memberships was a bad sign.

And in that time his theory about the decline in our civic participation has been debated widely. Have memberships really declined, or have our tastes in social activities changed? Are we signing fewer petitions because we care less about politics or because we’re getting involved in politics in other ways?

Regardless – it remains true that we still crave that sense of community. And when we can’t find it around us, through isolation or economic circumstance or a global pandemic, we look for it online.

And a growing number of people find it surrounded by conspiracists.

And as people spend more and more time in these circles, and convince themselves of increasingly outlandish and socially unacceptable ideas, they further weaken their ties with the people in their offline lives.

Writing about Flat Earthers in The Atlantic, Kelly Weill said:

The loss foregrounds practically every conversation at flat-Earth meetups, so common that some describe themselves with the language of persecuted minorities: Announcing one’s belief is referred to as “coming out,” a term most commonly associated with the LGBTQ community. Separated from loved ones, many then find themselves trapped inside the theory with the only other people who will believe them.

Eventually, a conspiracy community becomes your only community.

The second thing that draws people together is a sense of purpose. United in a common cause - standing for something you think is important, or against something you think is reprehensible.

You’re not alone at your keyboard looking at photos of celebrities, you’re fighting for a young gay couple in love, closeted by their evil managers.

You’re holding up signs at their concerts to tell them you support them.

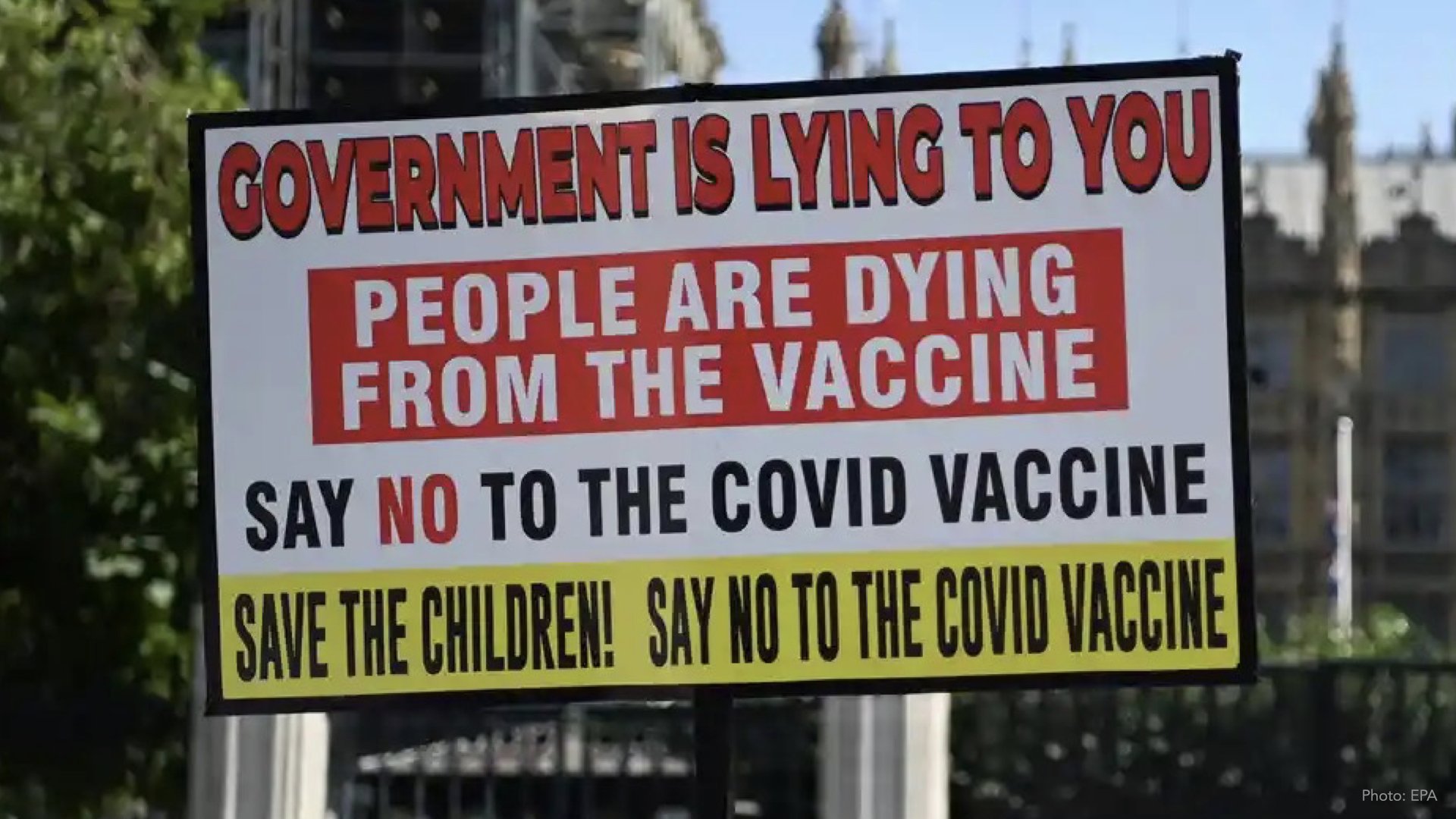

And there’s rationality in that too – if, as conspiracists increasingly believe, children genuinely were being trafficked by satanists, we’d all have a duty to act! If the vaccines really were leading to millions of hidden deaths, we’d feel compelled to do something about that too.

Larries see themselves as crusading for LGBTQ rights. QAnon and anti-vaxxers see themselves as crusading for children’s wellbeing and an end to shadowy government machinations.

For many people who find themselves in these spaces the present moment is maybe the first time they’ve found themselves with a lack of control, through economic inequality, government pandemic responses, mandates and lockdowns.

Recently Anne Helen Petersen wrote about this saying that “white, straight people with American passports are now feeling the same sort of societal precarity that has long been the norm for people without those privileges…Put differently: white people who have faced little adversity in their lives are beginning to grapple with what it means to suffer without cause, for reasons utterly outside of your control, in a way that feels abjectly unfair, with little or no recourse.”

Conspiracy theories have seemed to flourish in these times where people feel especially powerless to directly influence world events. People reaching for a sense of purpose when they feel especially helpless or overwhelmed by the state of the world.

Conspiracy theorists believe they’re on the side of good. That doesn’t make what they’re doing okay, and it certainly doesn’t mean we should tolerate it, but we have to do the work to understand it, and to understand how we got here.

The third thing that keeps people in these environments is something we’re all very familiar with. Dopamine.

We all know that our social infrastructure online is built around engagement. Facebook’s founders have admitted building the app around our need for this brain chemistry reward, and every other social platform has followed suit, to keep us distracted and hooked.

And look, I get it. I write fanfiction, and I’ve written original fiction, and I tell you – writing an unpublished novel is nowhere near as satisfying as the daily emails from AO3 telling me that people have liked and reviewed my fan works.

So if you’re part of a community where that dopamine hit is baked in, you can see why it causes people to stay.

For conspiracists, very quickly, being involved in these kinds of groups can lead to someone having a kind of insider influence. When the Facebook post you make or the YouTube video you film starts to garner likes and comments, it provides a feedback loop that you want more of. It’s human nature to be delighted that your ingroup likes what you have to say. And even if you have nothing to add, there’s still the buzz of reposting, reblogging, sharing. Even as a “lurker” you feel like you have a role to play. You’re a “digital soldier.”

There’s also a real sense of this kind of reward that comes in trying to solve a mystery. In looking for answers in signs and symbols. I get it – I played one of the first online Alternate Reality Games.

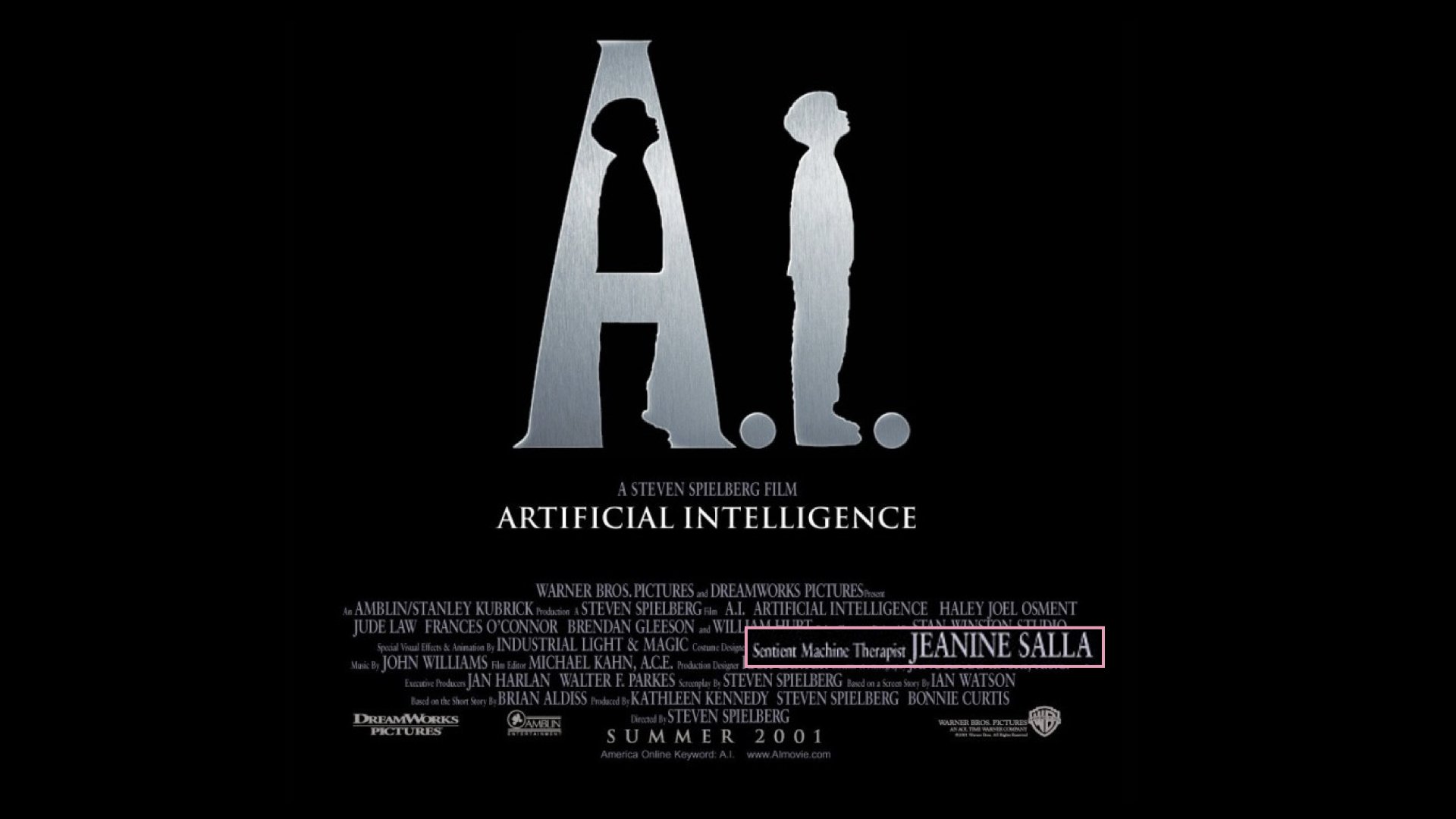

In 2001, I was working in London at a job that paid me quite a lot to do not very much. So you can imagine how quickly I was swept up in a mystery that started when the credits in the early trailers for the new Spielberg movie AI included a reference to a “sentient machine therapist”, one Jeanine Salla. Why a movie about robots would need a robot therapist was a mystery, and searching online for her name took you down a rabbithole of websites set in the future, where Evan Chan had been murdered in mysterious circumstances.

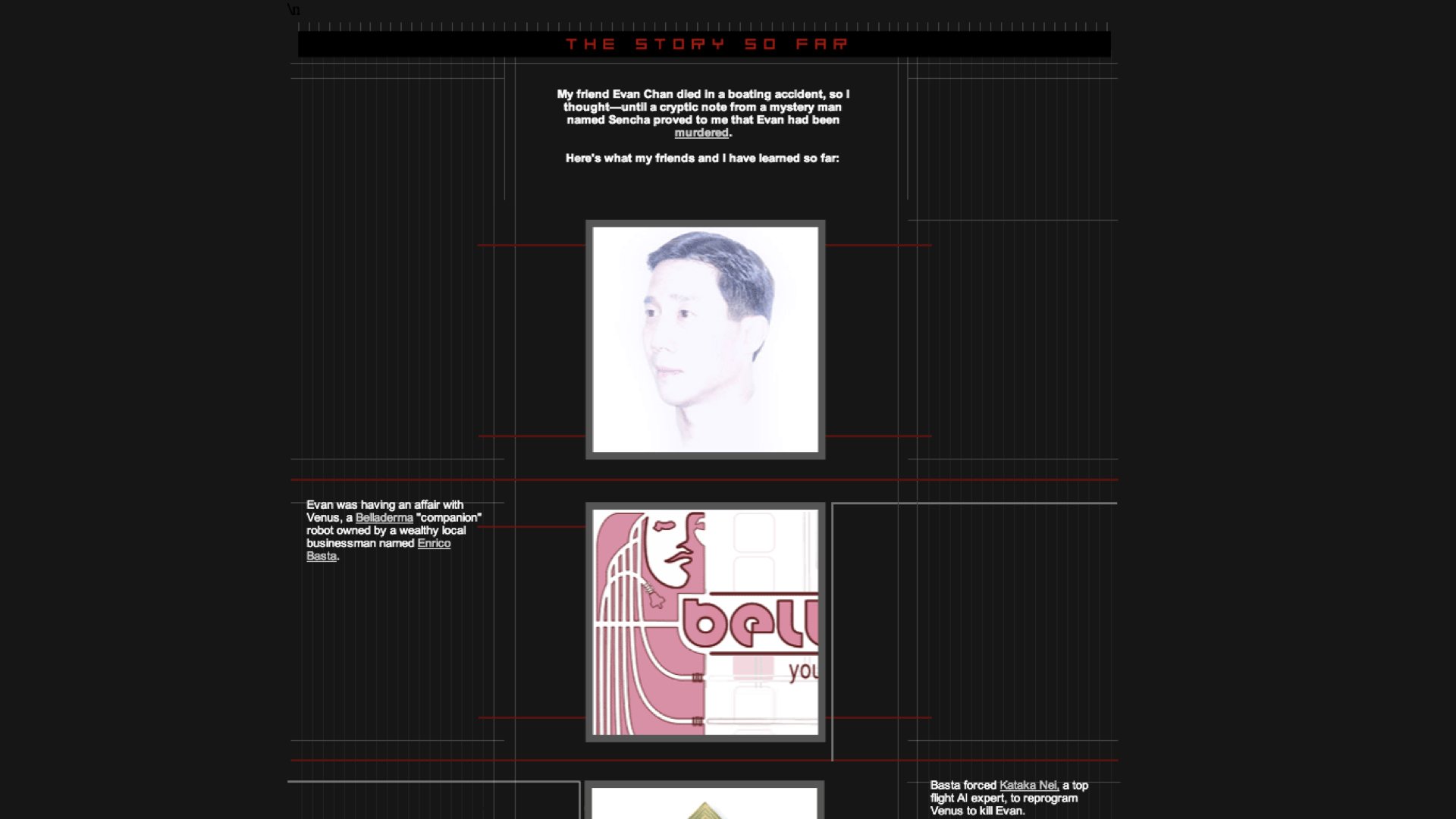

What unfolded was one of the most complex and successful Alternate Reality Games ever staged, eventually known as The Beast. Totally immersive, dozens of websites, clues that led to phone numbers. Sometimes the game called you. Sometimes it sent you faxes. At one point live actors were involved.

But here’s the crux of why I loved it. You couldn’t do it on your own. The puzzles were too disparate, too complex. Number puzzles, language puzzles, puzzles that had to be brute-forced. You needed the benefit of the community. Including, famously, one puzzle that turned out to have been written in lute music. You can imagine how excited the sole lute-player involved in the game was that day.

We love solving a mystery.

And you can also see why that same mystery solving, bread-crumb tracking mindset is at the heart of online conspiracy thinking.

Adrian Hon, creator of ARGs, notes that while QAnon is not an alternate reality game, it pushes the same buttons:

QAnon is not an ARG. It’s a dangerous conspiracy theory, and there are lots of ways of understanding conspiracy theories without ARGs. But QAnon pushes the same buttons that ARGs do, whether by intention or by coincidence. In both cases, “do your research” leads curious onlookers to a cornucopia of brain-tingling information.

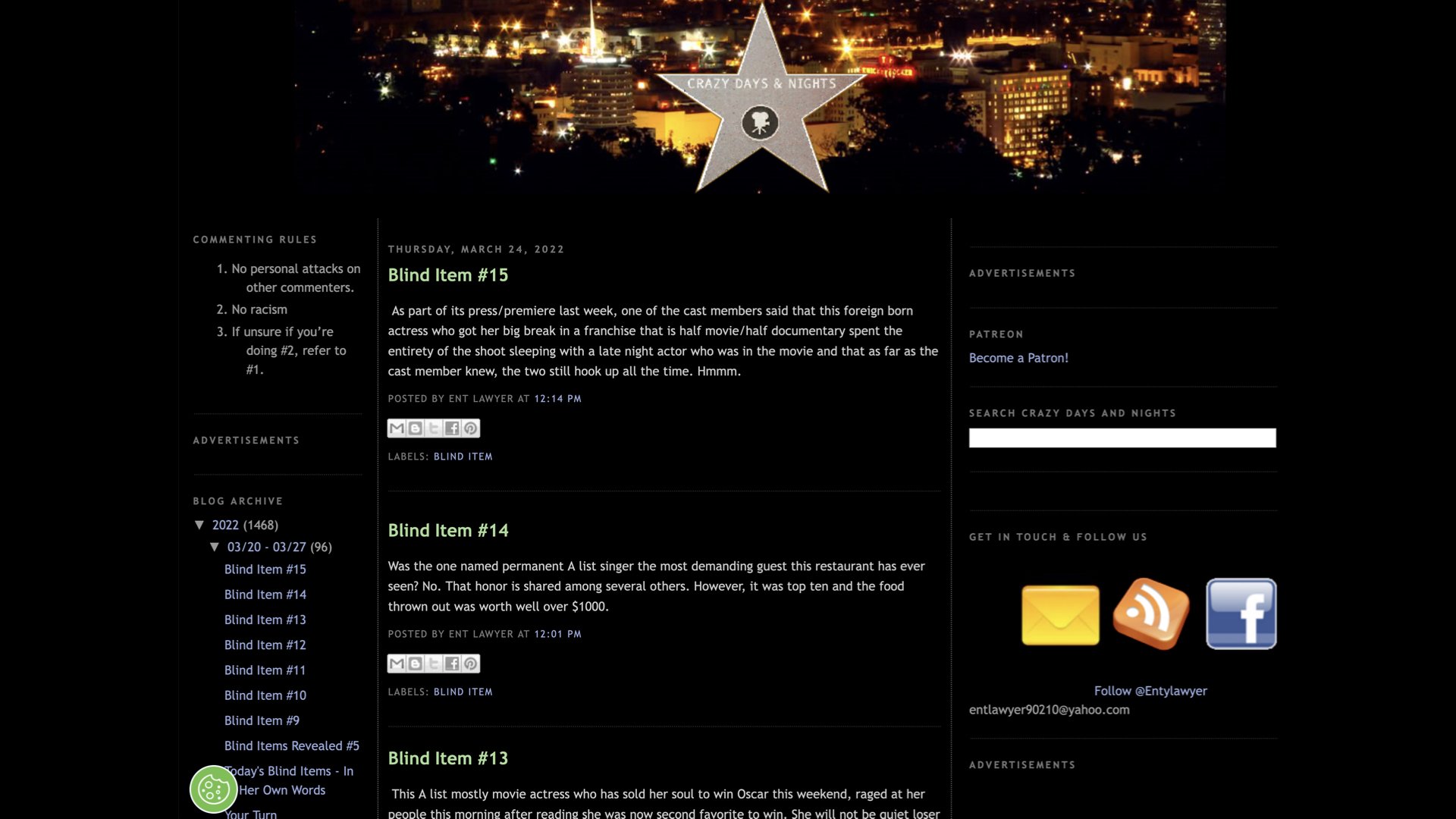

And those lines keep blurring. Crazy Days and Nights is a celebrity gossip site that posts “blind” items. Blind items are a form of gossip where the actual name of the person is left out and some of the details are obscured. For example, “This British born C list actor left his former fiancée for the then up-and-coming starlet after meeting her on set.”

It’s another kind of game. In the comments, people post their guesses about who it might be referring to.

But recently the site has been plagued with QAnon-adjacent blinds.

Again, to quote Petersen, blind item fans “think of gossip as a puzzle they can solve. When you think of the people who love QAnon, devouring those drops, that’s what they love. There’s pleasure in that analytical puzzle solving, and that translates very easily from Save the Children or Q to Crazy Days and Nights.”

It’s exactly the same thing that keeps people hooked on whether the colour of Louis Tomlinson’s t-shirt tells you anything about his relationship with Harry Styles.

And it may be part of the reason why at least one Q adherent is coming back to her family through the word puzzle game Wordle. I love this story. Her mum stopped sharing conspiracist content with her and started sharing her daily Wordle tactics. Try passing the game on to your conspiracist loved ones today.

So we know how people stumble across conspiracy thinking – and we know why they stay – but how does it go so horribly wrong – and what is our role in all of this?

My thesis is that these things are fine when they’re at a manageable size, but all of them break at scale.

Let’s go back through each of them in turn.

Dopamine breaks at scale.

An allegedly-harmless reward structure to make us feel good about being online has given birth to whole industries of influencers and clout-chasers. A whole category of people performing for the internet.

Even after a two-year experiment of hiding likes from its users, to reduce that dopamine hit, Instagram found users were still annoyed by the change - and turned them back on. You can opt out, but likes now remain publicly viewable by default.

That little reward nudge that we all enjoy so much, that might be fine in small doses, morphs and grows until influence is everything.

And in conspiracy circles influence doesn’t come from rehashing the same old content and ideas – as human beings we crave novelty.

So the desire for influence, for more of that dopamine hit, it drives people to increasingly niche theories or outlandish claims. Fresh ideas; new speculation. And so we get ideas like this.

Secondly, the concept of “Purpose” breaks at scale.

What starts out as a unified cause quickly draws in people with wildly differing sets of beliefs. Anything that grows quickly (and we’ll talk about that speed in a moment) doesn’t have time to build a cohesive set of norms or values.

The sense of shared purpose is lost – everyone is there for their own purpose.

And conspiracist thinking has no boundaries - no separation between one idea and the next. Join a Telegram group to discuss the fact that you’re worried about vaccinating your child and you’ll immediately find yourself awash in theories about 5G, human trafficking, voter fraud, and sovereign citizens. You may start with a clear sense of purpose, but you certainly won’t stay that way.

And thirdly, community breaks at scale. In many ways, this is the most interesting one to me. We crave community but we can’t seem to sustain it over a certain size. We often talk about Dunbar’s Number - the idea that the cognitive limit on the number of people we can have stable social relationships with is around 150.

I don’t know for sure if that’s the right number, but I do know that we don’t seem to be capable of having great communities at enormous sizes without really hard work. And I think in large part that’s because of how we’re building them.

The way we build companies that in turn create these communities has changed dramatically over the last thirty years or so. The Silicon Valley promise was that we could throw out traditional corporate structures and job titles in favour of flat hierarchies and unlimited leave and foosball tables.

A promise of a workplace that’s something more than an office job, that’s like your family.

But these companies grow so rapidly to such incredible sizes…

…that it seems to be unsustainable.

Lately I’ve been seeing a lot of nostalgia for the earlier days of the web. The sentiment seems to be, wouldn’t it be cool if we could go back to when everyone had a blog. Back when we just shared nice photos on Flickr and kept diaries in our Livejournals. It’s playing out in a fascinating way as we start to see the rise of evangelists for what is loosely being described as “web3”.

To hear a web3 advocate tell it, “web2” is all about extractive capitalism. It’s everything that’s wrong with the walled gardens, with Facebook and Twitter, with not owning your own content, and not being paid for your creative endeavours. For those of us around in the web2.0 era, that’s a depressing way to characterise it. That’s certainly not where the idealism of web2.0 started in any event - which was about interoperability, user-owned data, open protocols. All the same things that web3 evangelists are arguing for!

But increasingly I think what we’re seeing here is not just a nostalgia for the tools and the platforms and the idealism. Nostalgia for the early web is nostalgia for a different SCALE. It’s a longing for a time when there were just fewer people online.

So, the ways in which we’re meeting our three core human needs: Community, Purpose, and Dopamine – are all fine at a manageable, human size, but they all start to collapse at scale.

And there’s one final piece to this puzzle that also falls apart at scale. The myth of neutrality.

Despite the fact that some of them now have billions of users, companies across the tech sector are clinging to the idea that the technology they build can be apolitical.

Organisations like Coinbase and Basecamp have gone so far as to contend that the companies themselves can be apolitical. That it’s possible to have a workplace that is only “mission-focussed” — as if the mission itself can exist utterly divorced from the people carrying it out. It can’t.

Newer players like Clubhouse and Substack have shot to prominence on a wave of investor funding and an absolute absence of moderation or focus on user safety. “We’re platforms, not publishers”, everyone continues to say.

And now we have the idea of the “metaverse” - Facebook wants us all to move into its budget Second Life knock-off and hang out and have meetings and whatever – and there’s been no mention made of user safety.

It’s been almost thirty years since I last logged into LambdaMOO – thirty years since Julian Dibbell wrote his famous article “A Rape in Cyberspace” about what happened in that “metaverse”, and absolutely nothing has changed.

Hany Farid, a Dartmouth College computer-science professor who specialises in examining manipulated photos and videos, said (speaking about deep fakes):

“If a biologist said, ‘Here’s a really cool virus; let’s see what happens when the public gets their hands on it,’ that would not be acceptable. And yet it’s what Silicon Valley does all the time.”

“It’s indicative of a very immature industry. We have to understand the harm and slow down on how we deploy technology like this.”

Instead literally everything is apparently a good idea.

So it’s only when the physical real-world outcomes are so extreme — a violent insurrection threatening one of the world’s largest democracies, for example — that it becomes unpalatable for companies to be associated with conspiracy theorists. Which is why you finally see Trump removed from social media platforms at the last possible moment. And why Parler loses its hosting.

And now, we’re seeing the same thing unfold in relation to the war in Ukraine. Faced with calls to take action, companies like Facebook, TikTok, and DuckDuckGo suddenly found they could in fact come down off the fence and take action. That they weren’t neutral after all.

No, hosting platforms like AWS shouldn’t be one of the lone deciding voices in all of this, but we’ve let them be, because AWS itself is too big - so we get what Katie Notopoulos has dubbed moderation by capitalism. Yes, there should probably be a market of alternatives. Yes, regulation should work the way it’s intended to. No-one wants Bezos and Zuckerberg to have all this power. But we let them amass it. And now we need to take it away.

We need to move away from the Valley ethos that says it’s possible to build in the absence of politics. Fred Turner said, back in 2017, “When you take away bureaucracy and hierarchy and politics, you take away the ability to negotiate the distribution of resources on explicit terms…

…And you replace it with charisma, with cool, with shared but unspoken perceptions of power. You replace it with the cultural forces that guide our behavior in the absence of rules.”

We’re seeing the impact of that everywhere. The unspoken power imbalances, the voices that are amplified and the ones that are not. The critiques that are taken seriously and the people who are ignored.

Silicon Valley removed the hierarchies, changed the job titles, levelled the company structures, and put the focus solely on the technology, and it hasn’t worked.

And where does our fixation on “scale” come from?

The money.

The venture capitalist funding model that powers Silicon Valley.

For the better part of three decades now we’ve been sold this idea that the kind of hypergrowth that turns out unicorns is the only metric of “success” for a tech company, but most people don’t stop to think about why that is.

The reason is entirely to do with the way tech companies have been funded. The venture capital funding model, which relies on you burning outrageous amounts of capital to achieve hockeystick growth within a fixed timeframe to get a 10x return for your investors, so they can in turn pay out their investors. It’s absolutely artificial. And the investor class that funnels millions of dollars of funding into these companies isn’t interested in addressing any of the problems I’m talking about. Venture capitalists are by and large complicit and silent because controversy hasn’t hit their bottom line. If anything, it’s improved it.

Silicon Valley hypergrowth is a lie

I keep coming back to this piece that Lane Becker wrote six years ago now called All Human Systems are Trash Fires, in which he says, “Your company, your organization, your church, your campaign, your band, your political movement, your city, your dinner party, your revolution: At some point, you’ll look up, notice everything around you has been torched, and say to yourself, ‘Holy shit, this place is an enormous fucking trash fire.’”

He goes on to say, “Realizing this can be revelatory. Once you recognize that all human systems are enormous trash fires, … you ask better questions about your current trash fire. Like, ‘Am I doing everything I can to contain this enormous trash fire, even though I know it will never go out?’ … And, most importantly, ‘Am I surrounded by a team of firefighters or a team of arsonists?’”

I think I’m more optimistic than this. I think we can build things that aren’t trash fires. But I keep coming back to this central question: “are we firefighters? Or arsonists?”

What can we do to change direction?

The reality is it’s become dehumanizing - this many people online. And so people are seeking out alternatives to the noise and the crowds and the hate.

We’re drawing back into smaller private neighbourhoods. Social Discords and Slacks. Group chats. Substack commenting threads. Ask people where their favourite place on the internet is and it’s going to be an obscure private Facebook group about a podcast they like.

People are returning to older platforms, like Tumblr, for this very reason: Chronological, no algo, no influencers, barely any current events.

But there’s a danger in this. In us all peeling back off into small private worlds. Those worlds can lack diversity. Lack interaction with ideas we might not otherwise encounter. They can be echo chambers.

Recently, like many countries around the world, Aotearoa had some significant anti-government protests connected with our covid response and vaccination mandates. The protestors occupied the grounds outside our parliament for several weeks.

They enjoyed very little public support (our country's eligible population is 95% vaccinated), but more than that there was almost constant pressure on the government and the police to do something to move them on. As a nation we refused to cede our public square.

What if we felt the same way about our online spaces. If we decided not to cede our public square. Not give up on the dream of the internet - what could we do?

Let’s start with the most obvious one first, and I know it’s anathema to the internet utopians in the room. But whenever humanity has invented new forms of media in the past, regulation has had to follow. Radio, television, telecommunications. It’s never been the case that we’ve said “sure, share your message with hundreds of millions of people, unimpeded” – we’ve had standards, and we’ve had licensing, and we’ve had rules. We need effective antitrust legislation that prevents monopoly power from resting in the hands of a tiny few private companies that we rely on for almost everything.

Then we need law enforcement agencies who understand the ways in which technology is weaponised, coupled with rules around hate speech and online harassment.

And as artificial intelligence and algorithms start to play an increasingly important role in our lives, we need carefully thought out frameworks for their transparent and ethical deployment. Companies shouldn’t be able to refuse this transparency, like this egregious example where Facebook acted to remove its most widely viewed page for violating its rules, but refused to tell anyone what it was.

These are all complex areas of law reform and policy development that can’t be knee-jerk reactions to extreme events. We need to start the work now.

Next, we need to teach Defence Against the Dark Arts.

What does media literacy look like in the TikTok era? We try to teach young people to be literate about the information they consume, to check sources and so on…

But there’s something called the Illusory Truth Effect that supercharges propaganda on social media. This holds that if you see something repeated enough times, it seems more true.

Studies show that this works on 85% of people, AND that it happens even if the information isn't plausible & even if you know better! The only thing that is proven to reduce the Illusory Truth Effect is to train yourself to check every fact the first time you see it. How many of us do that?

Couple that with the new way platforms are essentially a firehose of information that you can’t really curate (like TikTok’s For You page)…

And young people are now being bombarded with a torrent of misinformation. It’s easy to dismiss this, and you see a lot of lazy takes that amount to “well, no one should be going to TikTok for their news.” But the point is, no one is going to TikTok for news.

They’re there already, and this is what they’re being shown.

Ryan Broderick recently drew what he called the “reverse idiot funnel” where any ludicrous theory from some conspiracy thinker is funnelled out through an increasingly predictable path of sources to mainstream spread. He also notes that it works equally well in reverse.

TikTok is now labelling Russian state media and Twitter is doing the same.

In the UK, the government has recently released this SHARE checklist to encourage people to consider the information they are passing on.

These things are a start. But we need to start thinking about how we teach people to defend themselves.

It’s incredibly confusing if our advice is “question everything, do your own research, don’t assume anything is legit”…

…when this is exactly the path that conspiracists head down.

The third thing is that we need to kick Nazis out of the bar.

This refers to a viral Twitter thread that many of you will have seen, in which someone retells the story of seeing a bartender kick out a patron wearing Nazi memorabilia.

The bartender argues that if you let one person like that stay, eventually they bring their friends, and before long you look around and your bar is a Nazi bar.

Over a decade ago, Anil Dash wrote a post entitled “If your website is full of assholes it’s your fault” in which he argued that by learning from disciplines like urban planning, zoning regulations, crowd control, we can come up with a set of principles to prevent the overwhelming majority of the worst behaviors on the Internet. And if we didn’t take responsibility for the neighbourhoods we were creating, that was on us. Ten years on, and what have we learned?

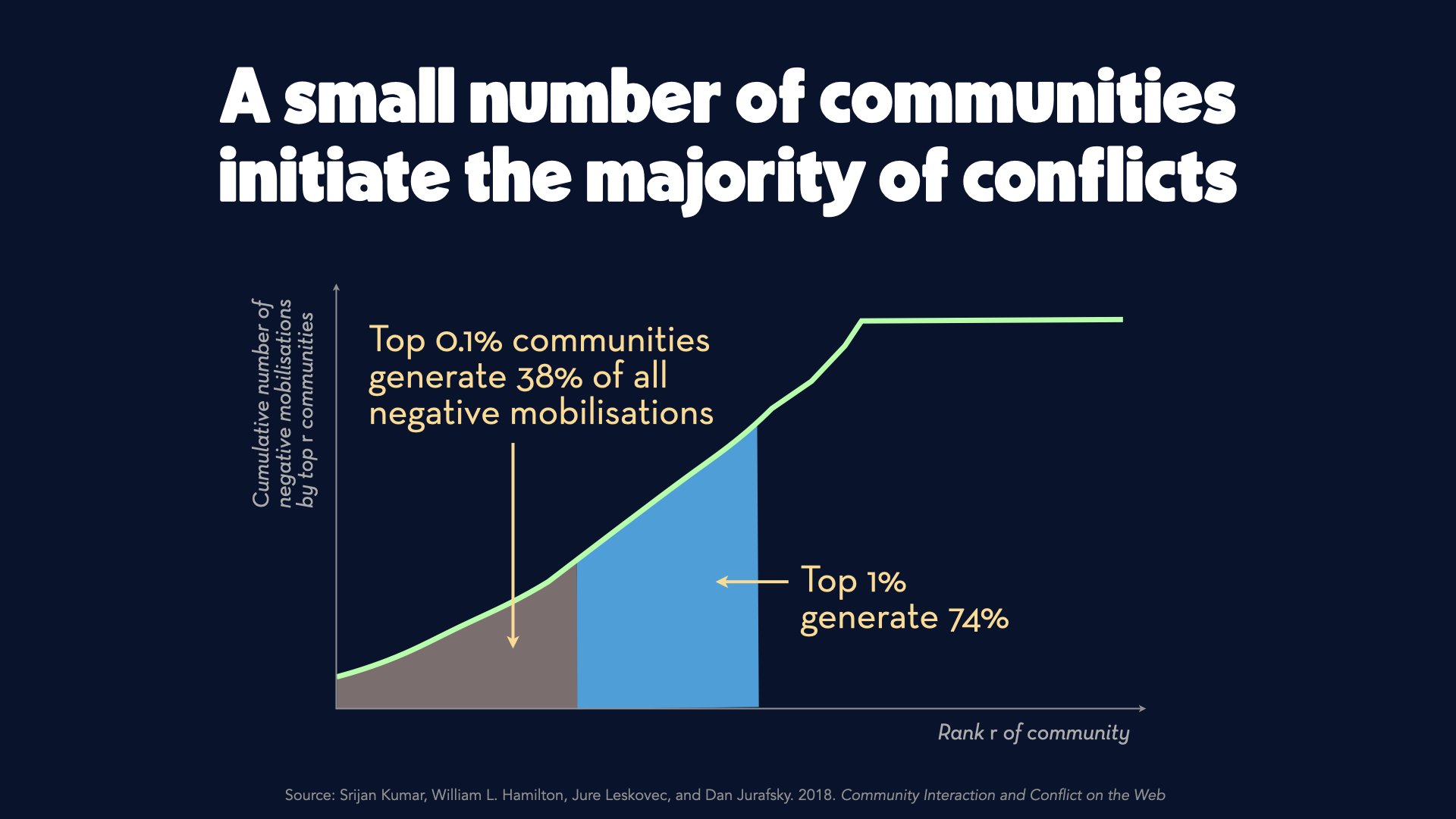

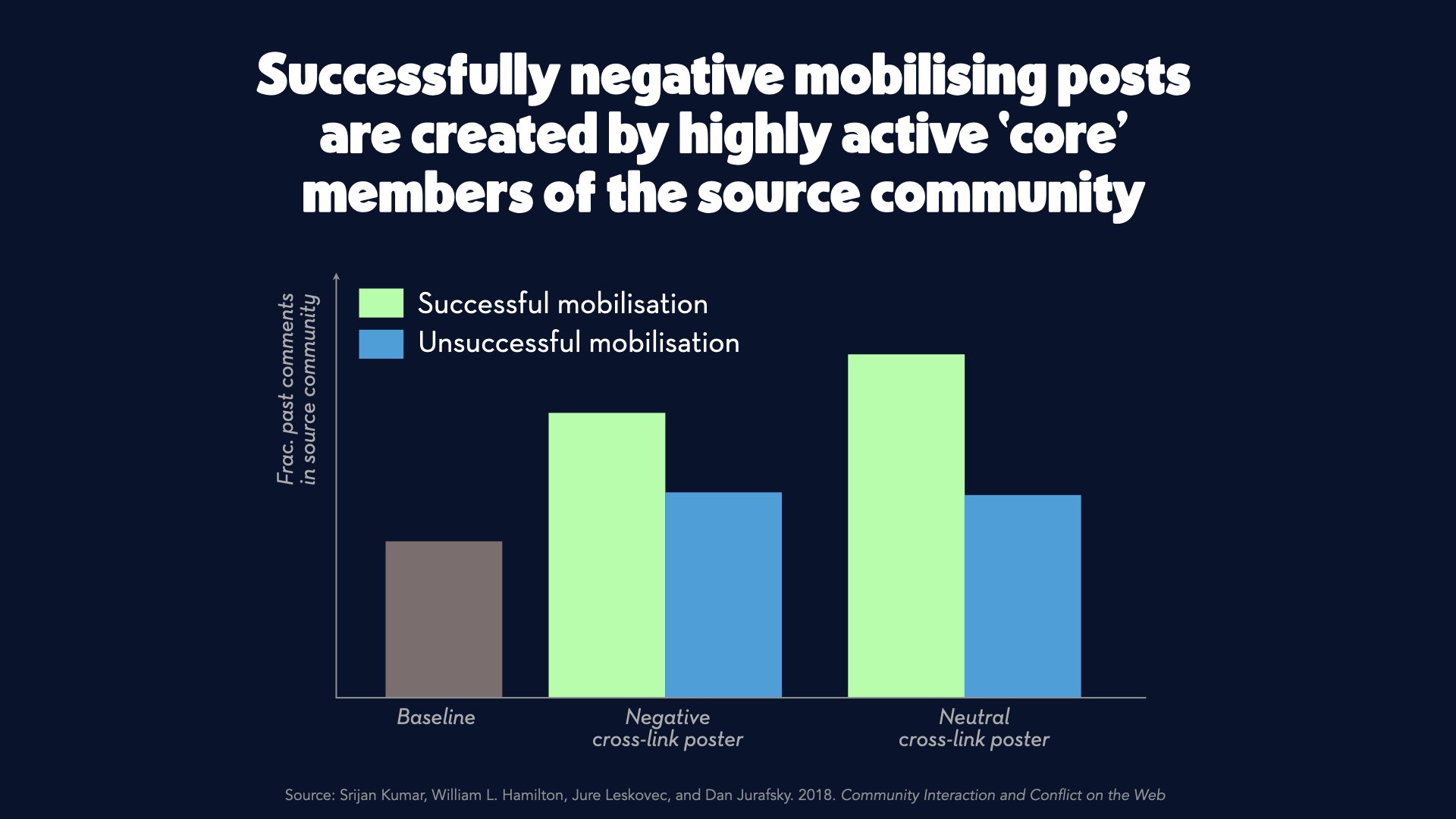

First, moderation works.

The reality is a small number of hostile users are responsible for all the bad behaviour in an online space.

And removing them works.

More than that - we used to think that there was something inherent to the anonymous online environment that led people to behave badly. But a study published last year investigating users in both the US and Denmark considered this “mismatch hypothesis”.

The idea that humans who would be nicer to each other in person might feel more inclined to get nasty online. The researchers found little evidence for that.

Instead, their data pointed to online interactions largely mirroring offline behavior, with people predisposed to aggressive, status-seeking behavior just as unpleasant in person as behind a veil of online anonymity, and choosing to be jerks as part of a deliberate strategy.

Is real human moderation easy? No. But it turns out we can focus on a small subset of the problem and have an outsized effect:

When Telegram was banned in Brazil, one of the changes it made to have the ban lifted was to have employees focus on the top 100 channels, responsible for spreading 95% of the public posts in the country.

Similarly, we can choose to focus on the content that is going viral - throttling it to slow the spread.

We need strong, enforced community guidelines. And they should cover behaviour both in the community and in other places.

Because every community, no matter how well intentioned, will have bad actors.

Both Twitch and Discord have moved in recent months to update their community guidelines to cover off-platform behaviour. Discord says it will consider things like membership or association with hate groups.

Twitch, similarly: “We recognise that toxicity and abuse can spread to Twitch from outside our services in a way that is detrimental to our community.”

Accountable identity is more important than real names. Dismantling anonymity is not as important as creating an environment in which people are invested in their online persona.

A recent study looked at three phases of online commenting on Huffington Post: total anonymity, stable pseudonyms (where you could be anonymous to other users but not to the platform, meaning a ban for bad behaviour was more likely to stick) and real names through Facebook authentication.

There was a great improvement after the shift from anonymity to “durable pseudonyms”. But instead of improving further after the shift to the real-name phase, the quality of comments actually got worse. What matters, it seems, is not so much whether you are commenting anonymously, but whether you are invested in your persona and accountable for its behaviour.

What does it look like to build a great neighbourhood – instead of trying to build a city? To, as the saying goes, “think globally, but act locally”?

The reason people are returning to tumblr, a disaster site I love with my whole heart which honestly barely works, is because in many ways it’s the antithesis of everything we’ve been talking about. There’s no algorithm. Sure you can like a post but it does _nothing_. The anonymity is baked in. And good luck ever finding a post again.

They’ve just introduced the ability to pay for a sponsored post but in typical tumblr fashion there’s no targeting, no guaranteed impressions.

Just the ability to pay ten dollars to share your lizard on other people’s dashes.

As a venture capital investment - tumblr is a disaster.

But as a place to hang out? It can be a delight.

What would it look like to have more places like that?

Like BeReal - the hot new app that gives you two minutes to post a photo from exactly where you are when the notification pops up - no time to stage anything for an audience.

When downloading the app, users are met with a clear warning: “BeReal won’t make you famous, if you want to be an influencer you can stay on TikTok and Instagram.”

What would it look like to start listening to and funding the people who deeply and genuinely understand the problems we’re facing?

For example, after suffering years of online harassment, Tracy Chou founded Blockparty: a set of tools that should (and could) have been baked into Twitter from the very beginning.

We shouldn’t need third party apps to do this work. We should be building our neighbourhoods with these tools in their very foundations.

What would it look like to build slowly rather than at breakneck pace?

Clive Thompson wrote recently about a study into the ways Wikipedia covered COVID-19.

At a time when mis- and disinformation were running rampant, you’d expect that Wikipedia would have suffered the same fate.

But the study found that actually it maintained a high standard of reliable information – and the way it did that was interesting. Relying not only on legacy sources, but old sources. Editors didn’t rush to the new and the shiny.

A few years ago at Webstock, my dear friend Natasha Lampard told the story of an onsen in Japan - the oldest company in the world - that has been operating for over 1,300 years. Fifty-two generations of the same family. She reflected on our venture capital-funded focus on exit strategies. And she wondered if the family that owns this onsen thought the same way.

I imagine they focused, not on an exit strategy, but on an exist strategy, a strategy built on sticking around; a strategy not for a buy-out, but for a handing down, a passing along.

As Tash said, “We can choose to remain small. We can choose to devote ourselves to something, and to those we serve. We can choose to do our small things in small ways and which, over a period of time, can build upon themselves.”

What does it look like, for example, to take a digital walk together with friends - to share the things we love online, not for clout or influence or likes or clicks?

Sharing our favourite things is a love language. Sitting around in front of the TV showing each other our favourite clips on YouTube. Our platforms and social infrastructure should feel like that.

Sometimes the best strategy might be not building something at all.

Sometimes our greatest contribution might be choosing not to do something.

One of the most striking findings coming out of the pandemic is that the countries that fared best were the ones with the highest levels of trust - in government and in one another. A recent study in The Lancet looked at data across 177 countries. The researchers tested everything they could think of for predictive power. They looked at G.D.P., population density, altitude, age, obesity, smoking, air pollution, and more.

What they found was that moving every country up to the 75th percentile in trust in government — that’s where Denmark sits — would have prevented 13 percent of global infections. Moving every country to the 75th percentile of trust in their fellow citizens — roughly South Korea’s level — would have prevented 40 percent of global infections.

That trust and reciprocity brings us full circle to the things Putnam argued we lost back when he wrote about us bowling alone. Our online neighbourhoods need this built into their foundations, not consistently undermined.

There is no magic wand that will make people trust one another, work together, feel comfortable with one another and welcoming to newcomers. We have to work at that.

And I firmly believe that the networks and spaces that we’re part of that are healthy and inclusive and happy places to be, have to show up the places that are toxic and broken to the point that people won’t want to be a part of them any more.

What if the good neighbourhoods we choose to build can influence the bad?

Ayesha Siddiqi wrote recently about the things we’ve gained from social media.

“I think of people who made it possible for sexual violence to have social consequence. I think of the students using Discord to organize school walkouts. I think of all the people getting help through therapists posting on Instagram and life coaches on Tiktok. Sure, the quality varies, but that’s true of the healthcare system too. ADHD and autism is under-diagnosed in girls and people of color. These individuals have been better able to access life improving guidance online. Some of the best culture writers today came up on tumblr and Twitter; we would’ve missed out on so many valuable perspectives without them.

How do we meet these needs of expression, connection, knowledge production and entertainment?

How can we rise to this challenge? How can we each choose to build not for money or for power or for scale, but in the public interest?

The thing I love most about the internet is its ability to bring us together. Vibrant communities of engaged people connecting around the things they love. I’m so passionate about fans and fandom because, at its best, it lets people find companionship and support, and be creative and share their work. And the tools and the platforms and the organisations that we build make this possible. So it’s up to us to save it, to dig out the toxicity and the hate built into the very foundations. To put the focus back on people and away from the engineering solutions. To stop leading people down rabbitholes. To stop leading them anywhere, really. And to find a way to celebrate being in community with one another all over again.

With thanks to Nozlee, Jes, Luce, Rochelle, Lillian, Helen, Rob and Carl — and as ever to Su Yin for doing such beautiful work (under trying conditions) with these slides.